Detecting polarisation and the spread of mis-and-disinformation on social media

“A lie can travel around the world and back again while the truth is lacing up its boots.”

This quote, and variations of it, have been attributed to several different people over the last few centuries. But the origin of the quote isn't essential. Its message, though, is. Especially now that there is social media.

Disinformation is misinformation that’s deliberately posted. The spread of both on social media is a serious problem, especially during crises like a bushfire or the COVID-19 pandemic. But ACEMS researchers are trying to do something about it.

“Some forms of disinformation can cause damage in hours or even in minutes, and it is critical to detect and take action in near real-time,” says Dr Mehwish Nasim, an ACEMS Associate Investigator from Flinders University.

While still at The University of Adelaide, Mehwish began working on this problem with her ACEMS colleagues there, including Chief Investigator Lewis Mitchell, Associate Investigators Jono Tuke and Nigel Bean, and Masters Research Student Tobin South. They began exploring how online social networks form and evolve. These networks allow people to connect easily with like-minded people. But building networks with others who think the same way, or similarly, can lead to “echo chambers”, where people rely on a limited number of authoritative sources of information and quickly reject views from outside their online community.

“This may lead to a polarisation of attitudes regarding social, political and religious issues. Even worse, it may foster extremism, radicalisation and serve as the grounds for spreading misinformation,” says Mehwish.

In their pilot study, the team analysed Twitter data from Australia’s same-sex marriage debate in 2017.

“We found two polarised communities in the data. One favouring the hashtag, #VoteYes, and the other favouring #VoteNo,” says Mehwish.

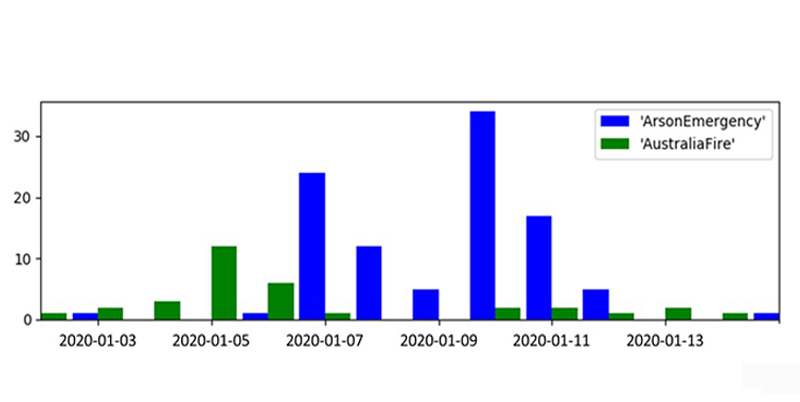

In early 2020, during Australia’s unprecedented bushfire season, the team then analysed the trending hashtag #ArsonEmergency. Supporters of that hashtag discounted claims that climate change was a factor in the fires, instead claiming arson was the main reason.

Counts of tweets using the terms ‘ArsonEmergency’ and ‘AustraliaFire’ without a ‘#’ symbol from 2–15 January 2020 in meta-discussion regarding each term’s use as a hashtag (counts outside this period were zero).

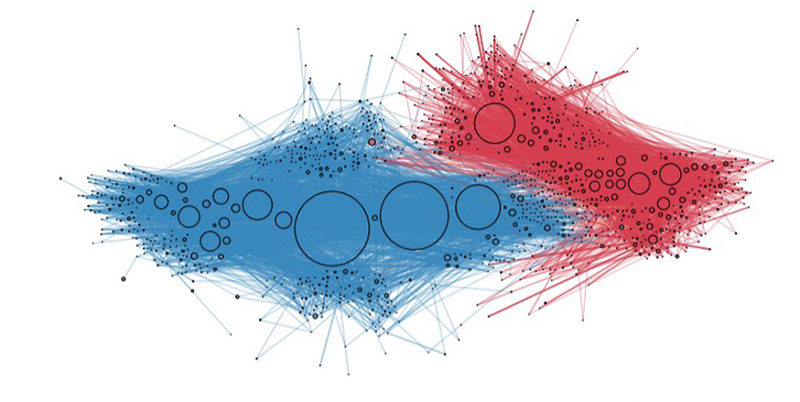

Polarised retweets graph about the arson theory. Left (blue): Opposers, right (red): Supporters of the arson narrative. Nodes represent users. An edge between two nodes means one retweeted the tweet of the other. Node size corresponds to degree centrality.
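As a rough illustration of how a graph like this can be built, the sketch below turns a list of retweet pairs into an undirected graph and computes each user's degree centrality (the number of distinct users they are linked to, divided by the maximum possible). The usernames and retweet pairs are made up for the example, and the function names are hypothetical; this is not the team's code or data.

```python
from collections import defaultdict

# Hypothetical retweet records: (retweeting_user, original_author).
retweets = [
    ("ana", "ben"),
    ("cai", "ben"),
    ("dev", "ben"),
    ("eli", "fay"),
    ("gus", "fay"),
    ("ana", "cai"),
]

def build_retweet_graph(pairs):
    """Return an undirected adjacency map: user -> set of neighbours."""
    neighbours = defaultdict(set)
    for retweeter, author in pairs:
        if retweeter == author:
            continue  # ignore self-retweets
        neighbours[retweeter].add(author)
        neighbours[author].add(retweeter)
    return dict(neighbours)

def degree_centrality(graph):
    """Degree of each node divided by the maximum possible degree (n - 1)."""
    n = len(graph)
    if n < 2:
        return {user: 0.0 for user in graph}
    return {user: len(adj) / (n - 1) for user, adj in graph.items()}

graph = build_retweet_graph(retweets)
centrality = degree_centrality(graph)

# List users from most to least connected.
for user, score in sorted(centrality.items(), key=lambda item: -item[1]):
    print(f"{user}: {score:.2f}")
```

In this toy data, `ben` is linked to three of the six other users, giving a centrality of 0.5, so it would be drawn as the largest node.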

“The communities that we observed during the same-sex marriage debate aligned very well with the polarised communities during the bushfires. The #VoteNo group aligned with the supporters of the arson theory, and vice-versa,” says Mehwish.
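One simple way to put a number on this kind of alignment is to cross-tabulate the community labels of users who appear in both datasets. The sketch below does that with invented users and labels; the pairing of #VoteYes with opposers and #VoteNo with supporters follows the quote above, but the data, the function names and the choice of measure are assumptions for illustration, not the method used in the study.

```python
from collections import Counter

# Hypothetical community labels per user in each debate.
marriage_debate = {
    "u1": "VoteYes", "u2": "VoteYes", "u3": "VoteNo",
    "u4": "VoteNo", "u5": "VoteYes",
}
bushfire_debate = {
    "u1": "Opposer", "u2": "Opposer", "u3": "Supporter",
    "u4": "Supporter", "u5": "Supporter", "u6": "Opposer",
}

def cross_tabulate(first, second):
    """Count label pairs for users present in both datasets."""
    shared = first.keys() & second.keys()
    return Counter((first[user], second[user]) for user in shared)

def alignment_share(table, pairing):
    """Fraction of shared users whose two labels match the expected pairing."""
    total = sum(table.values())
    if total == 0:
        return 0.0
    matched = sum(
        count for (a, b), count in table.items() if pairing.get(a) == b
    )
    return matched / total

# Expected pairing, as described in the article.
pairing = {"VoteYes": "Opposer", "VoteNo": "Supporter"}

table = cross_tabulate(marriage_debate, bushfire_debate)
share = alignment_share(table, pairing)
print(f"Aligned users: {share:.0%}")
```

Here four of the five users seen in both debates fall into the expected pairing, so the share is 80%. A value near 50% would suggest the two sets of communities are unrelated.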

But the team did find one surprising twist. The communities formed during the same-sex marriage and bushfire debates did not align with communities found during the Australian Federal elections. Mehwish believes this suggests that polarisation based on partisanship may not perfectly represent people’s views on socio-economic issues.

“Our three-year-long analysis revealed these echo chambers were not as ‘closed’ as we once thought. That offers some hope that concerted outreach to members may reduce these echo chambers,” says Mehwish.

Mehwish is now trying to create AI-based models to address the current challenges in detecting misinformation. The models examine user profiles, how users interact on social media, the groups they belong to, and how susceptible they are to sharing misinformation.

She also wants the models to be able to detect ‘bots’. Short for robots, bots are software programs that simulate human interaction on social media. Because they are automated, they operate much faster than humans.

“Bot detection is like trying to hit a moving target since social bots evolve, making them resilient against standard bot detection approaches,” says Mehwish.

Bots are among the more severe threats in the fight against mis-and-disinformation, mainly because most users can’t spot one.

“Mis-and-disinformation campaigns, aided by social media bots and trolls, are constantly trying to encourage large numbers of people to believe and share false narratives. It’s not hard then to see how that can influence a significant portion of the population to believe something that isn’t true,” says Mehwish.

That’s the danger, especially if those narratives lead to bad policies or inaction on an issue.

Mehwish also has two more goals for the models she’s trying to create. First, she wants them to be resilient against missing or erroneous data. Second, they need to be explainable and transparent for the analysts or decision-makers who will use them.

“It’s an issue of trust. I want decision-makers to have higher confidence in the results my models will be producing so that they use and act on that information,” says Mehwish.

During 2021, ACEMS provided Mehwish with additional funding via the Centre’s Research Support Scheme and Research Sprint Scheme to extend and fast-track this research with her ACEMS collaborators at The University of Adelaide. Outside of ACEMS, her collaborators are with the Defence Science and Technology Group (DSTG) and The University of Melbourne.